In the novel Accelerando by Charles Stross, the protagonist Manfred Macx is a power user of smartglasses. These glasses dramatically enhance his ability to interact with the world. Over the course of the book, we learn about the glasses' incredible capabilities, from real-time language translation to dispatching agents to perform complex tasks across the internet. At one point, Manfred has the glasses stolen from him, and it becomes clear that they form a significant part of his cognition.

Offloading parts of your cognition to a device is already commonplace today. Many of us already rely on our smartphones for upcoming events, directions, reminders, etc. If you take the idea of having a "silicon lobe" to its logical conclusion, you end up at... a computer being physically integrated into your brain, aka Neuralink. This is true in the novel too, with the glasses being "jacked in" to the brain directly, allowing for a higher bandwidth interface and direct sensory manipulation.

In the interim between the iPhone and Neuralink, I predict we will see some kind of Augmented Reality (AR) glasses hit the mainstream for consumers, similar to those in the book (minus the direct neural interface). This device may become roughly as integral to your life as Manfred's is to his, where losing it is equivalent to a lobotomy!

This post is a deep dive into the following:

- What are the concrete use cases for AR glasses?

- What are the key challenges facing AR glasses?

- What will the first successful consumer product look like, and when will they arrive?

- What are the key innovations required for the ultimate consumer smartglasses?

What are AR glasses?

AR glasses are a form of wearable technology that superimposes digital information onto the real world. While all AR glasses are smartglasses, not all smartglasses are AR glasses.

Below you can see the 2024 Ray-Ban + Meta smartglasses, one of the first forays into the consumer market. I'll be using them as a reference point throughout this post.

Notice how the arms are quite a bit thicker than standard sunglasses from Ray-Ban. These glasses pack in a huge amount of electronics to provide users with a rich "AI + Audio" experience. Using the integrated camera and spatial audio, you can ask questions, listen to music and use AI to perform other tasks. However, they're lacking the crucial component of AR glasses: a display. Meta isn't stopping there though, and is expected to announce Orion and Hypernova, its true AR glasses, at Meta Connect on September 25 - 26, 2024 1.

Use Cases

In order to usurp the smartphone and become the primary interface with the digital world, AR glasses must provide a significant boost in utility. The use case that everyone is talking about today is AI integration, and for good reason. In my opinion, every human could get a staggering amount of utility from having [insert SOTA LLM] deeply integrated into their lives. These LLMs are already proving incredibly useful in chatbot form; however, they've quickly become bottlenecked - not by IQ, but by context and bandwidth.

- Context: See and hear everything the user does (privacy and regulation notwithstanding, Zuckerberg highlighted this use case at Meta Connect 2023 2).

- Bandwidth: Higher information transfer between the user and the computer (the average person types at a shockingly slow ~40wpm 3).

Perhaps the latest wave of AI innovation will be the catalyst for AR glasses to hit the mainstream. To see what the glasses might be capable of, let's analyse some use cases.

1 - Contextual Overlay

The obvious use case for AR glasses is providing updates and real-time information in a timely and contextual manner. Microsoft has achieved a modicum of success in the enterprise AR market with their HoloLens 2, which is primarily targeting manufacturing and military applications. The glasses provide real-time, hands-free instructions to workers, allowing them to carry out routine tasks more efficiently. Despite being relatively absent from the public consciousness, the HoloLens line has sold over half a million units since its release 4.

2 - Sensory Control and Manipulation

Whilst we won't be able to directly interface with our auditory and visual systems for at least the next 5 years, that doesn't mean AR glasses can't give users a newfound control over their senses. One of the most liberating things about the proliferation of high quality Active Noise Cancelling (ANC) headphones is the ability for an individual to filter their audio input. The market has responded well to this ability, and in 2020, Apple's revenue from AirPods sales was larger than the entirety of Twitter, Spotify and Square combined 5.

What would this look like for visual data? Perhaps something reminiscent of "Times Square with Adblock on".

The ability to modify your visual input is a powerful one, and it could be used for more than just blocking ads. For example, during a conversation with a person speaking a different language, you could use a diffusion model to modify their lip movements to match your language. This, plus ANC and a speech-to-speech model, could be an excellent start to the dissolution of language barriers. In order to achieve both of these use cases, the AR display would need to be extremely high-resolution and low-latency, which is a significant challenge we'll discuss later.

3 - Personal Assistant Functions

A person's name is to that person, the sweetest, most important sound in any language.

— Dale Carnegie

Whilst this is perhaps a subset of "Contextual Overlay", it's worth highlighting, as it is a compelling use case far beyond what smartphones can provide today. By providing pieces of data throughout the course of a day, everyone could become more charismatic and thoughtful. You'll never have to forget a name or a face ever again.

You can take this further too. According to Carnegie 6, Abraham Lincoln would dedicate considerable time to learning about the interests and backgrounds of people he was scheduled to meet. This allowed him to engage them in conversations about topics they were passionate about, greatly boosting his likeability. With AR glasses, everyone could do this effortlessly (much to the chagrin of those of us who have expended considerable effort practicing this). This is on top of the more obvious personal assistant utilities! However, for this to be totally effective, the glasses would need to serialize everything you ever see or hear, which is quite the social and regulatory challenge.

Other Uses

The outlined use cases above are just the tip of the iceberg. In Accelerando, the glasses also perform a variety of futuristic tasks, such as:

- Dispatching AI agents to perform complex analysis across the internet

- Direct neural interfacing for higher bandwidth communication

- Personalized vital sign monitoring

Even without these capabilities, by simply combining contextual overlay, sensory control and personal assistant functions, we can already see the immense value that AR glasses could provide to users within the next ~3 years. Let's dive into the technical challenges that must be overcome to make this vision a reality.

Physical Constraints

In order to better understand the technical challenges, let's first establish some axioms based on the physical constraints of the glasses.

Weight

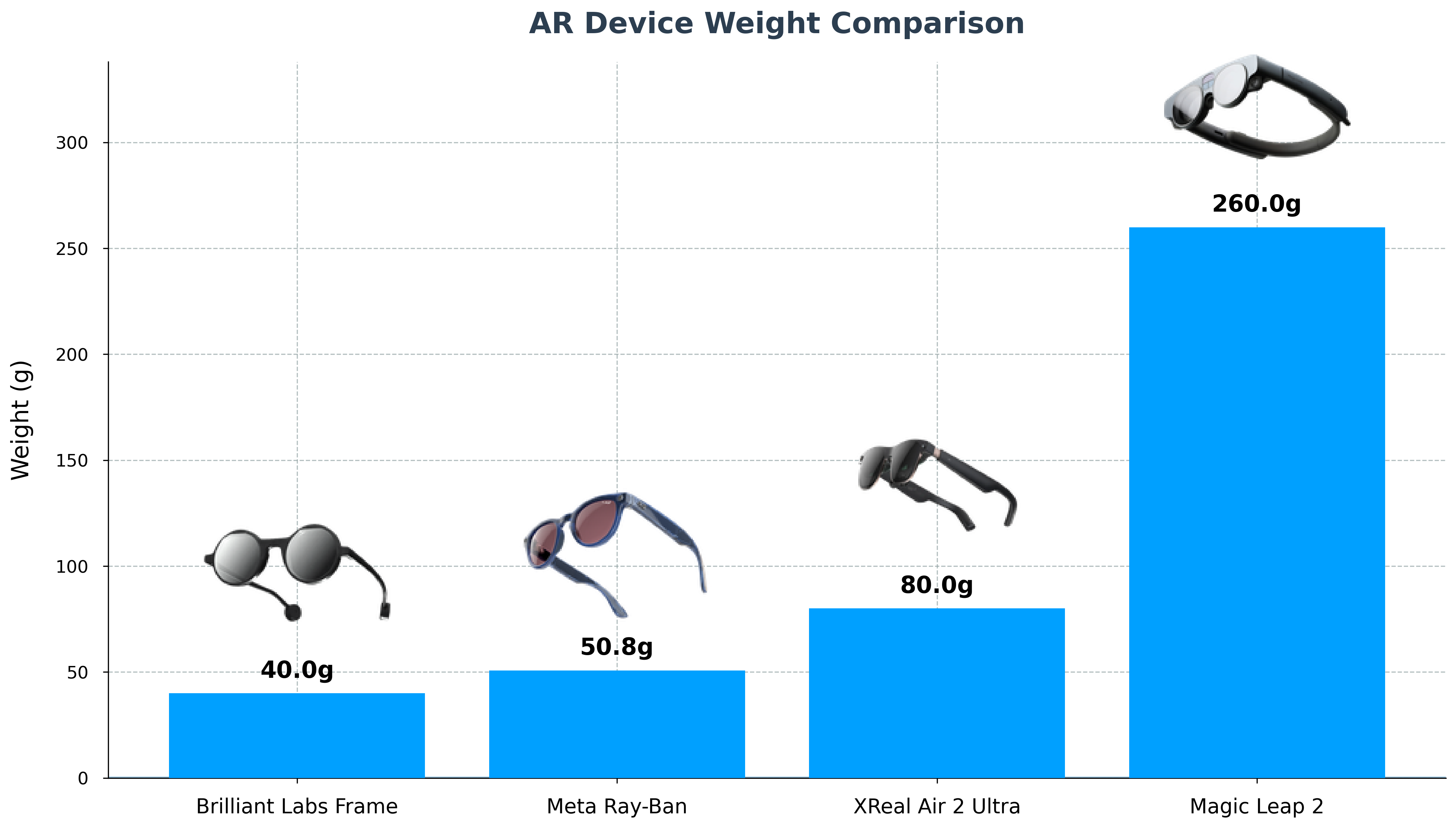

There is surprisingly little literature on the acceptable weight of consumer glasses, so let's look at some current products on the market.

Anecdotally, it seems like the Meta smartglasses are light enough for all day wear, with the XREAL Air 2 Ultra not being too far off. Given the potential utility of the glasses, I think using 75g as an upper bound is reasonable. This also aligns with Meta's rumoured Hypernova glasses we mentioned earlier, which reportedly sit at ~70g 7.

Volume & Battery Life

Another concrete axiom we can build off of is volume. The shape of the human head is going to stay consistent for the foreseeable future, and whilst there is some leeway to be found in the flexibility of social dynamics, we can expect the form factor (and therefore volume) of the glasses to be approximately similar to glasses today. To calculate a baseline volume, I went to the closest demo location and measured the Meta smartglasses with calipers.

From my measurements, the volume of each arm is approximately , so for both. If we naïvely used the entire volume of a single arm as a battery (and allocate the rest to electronics), and assuming we use a SOTA battery with at a voltage of , we can calculate the maximum possible battery life of any smartglasses as follows:

is approximately half of the battery life of a modern smartphone, but is an order of magnitude larger than the battery that currently ships in the Meta smartglasses highlighted below (). To put it simply, if we want 8 hours of battery life, the glasses are limited to ().
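The shape of that calculation can be sketched in Python. This is a minimal sketch, not a reproduction of the measurements above: the arm volume, energy density and nominal voltage below are placeholder assumptions chosen only to make the arithmetic concrete, and the function names are hypothetical. Only the 8 hour target comes from the text.

```python
def battery_capacity_mah(volume_cm3: float,
                         energy_density_wh_per_l: float,
                         voltage_v: float) -> float:
    """Capacity (mAh) of a battery that completely fills `volume_cm3`."""
    energy_wh = volume_cm3 / 1000.0 * energy_density_wh_per_l  # cm^3 -> litres
    return energy_wh / voltage_v * 1000.0                      # Wh / V = Ah -> mAh


def power_budget_mw(capacity_mah: float, voltage_v: float, hours: float) -> float:
    """Average power draw (mW) that empties the battery in exactly `hours`."""
    return capacity_mah * voltage_v / hours


# Placeholder inputs (assumptions for illustration, NOT the post's figures).
ARM_VOLUME_CM3 = 5.0
ENERGY_DENSITY_WH_PER_L = 700.0
NOMINAL_VOLTAGE_V = 3.7

capacity = battery_capacity_mah(ARM_VOLUME_CM3, ENERGY_DENSITY_WH_PER_L, NOMINAL_VOLTAGE_V)
budget = power_budget_mw(capacity, NOMINAL_VOLTAGE_V, hours=8.0)
print(f"capacity ~ {capacity:.0f} mAh, 8 h power budget ~ {budget:.0f} mW")
```

Swapping in real caliper measurements and a real cell datasheet changes the numbers but not the structure: the volume fixes the energy, and the target runtime fixes the average power the whole system may draw.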

Maximising battery life is absolutely critical for AR glasses, and it's part of what makes them such an enticing challenge. It requires a full stack solution, from efficient software to efficient hardware to efficient power management. From the above teardown, we can see that Meta hasn't exactly maximised volume usage, so I expect that later generations of glasses could have significantly longer battery life. Perhaps like in aeroplanes and electric cars, we will see power sources being used as structural components in the glasses to increase volume utilisation.

Technical Challenges

On top of the physical constraints, we have to deal with the following technical challenges:

- Near Eye Optics

- Form factor

- Compute requirements

- Heat dissipation

- What does it look like when it's off?

- Eye glow

- Efficient SLAM

- Accurate eye, facial & hand tracking

We won't explore all of these challenges in this post, but progress is being made everywhere! Let's take a look at the most significant challenge: near eye optics.

Near Eye Optics - The Fundamental Challenge

Success of smartglasses in a consumer acceptable form factor begins & ends with near eye optics.

— Christopher Grayson, 2016

The above quote seems to be the underlying truth behind the lack of true consumer AR glasses. Therefore, we will devote significant time and energy to understanding the two key components of near eye optical systems and the advances required.

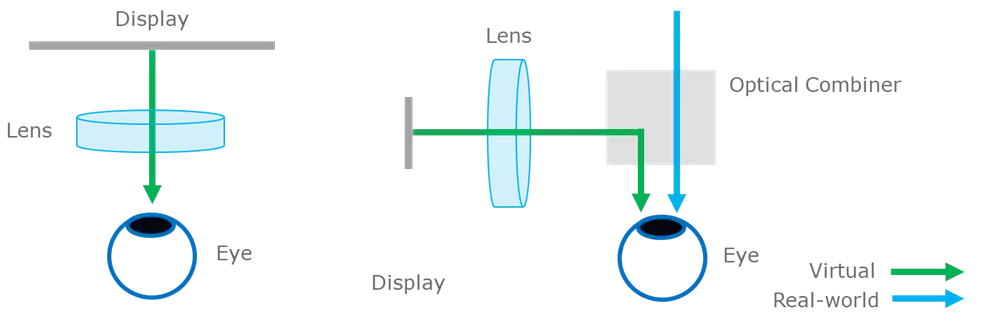

The above diagram from Radiant Vision Systems 8 demonstrates why AR optics are much more challenging than VR. On the left hand side, you can see the simplicity of a VR optical system - the light from a display is fed directly into the eye with lenses for magnification. On the right hand side, you can see the AR optical system, with a compact display offset from the eye. This requires a complex optical combiner to overlay the digital image onto the real world.

Why are the optics so challenging?

Karl Guttag (a legend in the AR space) frequently comments that AR is many orders of magnitude more difficult than VR. The two major bottlenecks in AR optics that require significant advances are the underlying display technology for image generation, and the optical combiner for merging real-world and digital imagery into a single view.

To start to get a sense of the challenges, let's break down the requirements for the display:

- Small enough to fit into the glasses' form factor (order of magnitude smaller than a VR display)

- Not placed directly in front of the eye, but offset with the light routed to the eye

- Positioned close to the eye, so the light must be heavily modified for the eye to focus on it

- Extremely bright ( (nits) to eye ideally), to compete with sunlight and massive losses during transmission

- High-resolution (to cover a ~100° field of view (FoV) with human eye acuity of 1 arcminute, ideally 6K×6K resolution is required for each eye 9. This equates to roughly 1µm pixel pitch)

- Low power consumption

These stringent requirements are compounded by the fact the optical combiner will inherently degrade the light from both the real world and the display!
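The resolution requirement in the list above is simple arithmetic, sketched here in Python. It assumes one pixel per arcminute spread uniformly across the FoV, which is a simplification of real optics, and the function names are hypothetical. The display width it prints is only what the ~100° FoV and ~1µm pitch figures jointly imply, not a number stated in this post.

```python
ARCMIN_PER_DEGREE = 60.0


def pixels_for_fov(fov_deg: float, acuity_arcmin: float = 1.0) -> int:
    """Pixels needed along one axis so each pixel subtends `acuity_arcmin`."""
    return round(fov_deg * ARCMIN_PER_DEGREE / acuity_arcmin)


def display_width_mm(pixels: int, pixel_pitch_um: float) -> float:
    """Physical width of a row of `pixels` at the given pixel pitch."""
    return pixels * pixel_pitch_um / 1000.0


pixels = pixels_for_fov(100.0)             # 100 degrees at 1 arcminute per pixel
width = display_width_mm(pixels, 1.0)      # at ~1 micron pixel pitch
print(f"{pixels} x {pixels} pixels per eye, ~{width:.0f} mm wide display")
```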

Optical Combiner

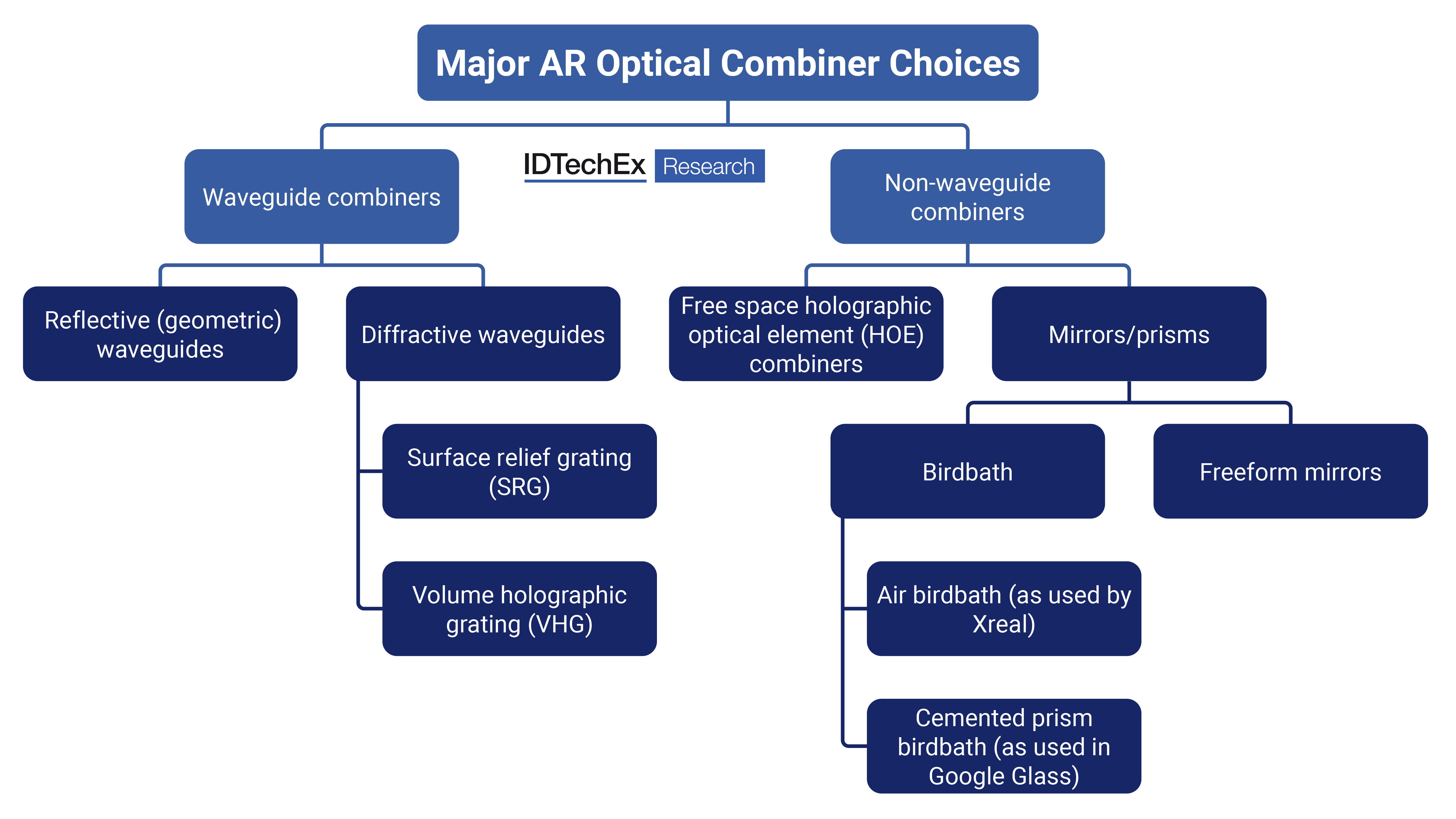

How do we actually combine the light from the two sources? Below you can see a map from IDTechEx of all optical combiner methods:

As you can see, optical combiners are divided into waveguides and non-waveguides. I'm not going to discuss non-waveguides in this post, as from my research it seems like waveguides are going to be the technology of choice for the first generation of consumer AR glasses.

Waveguides

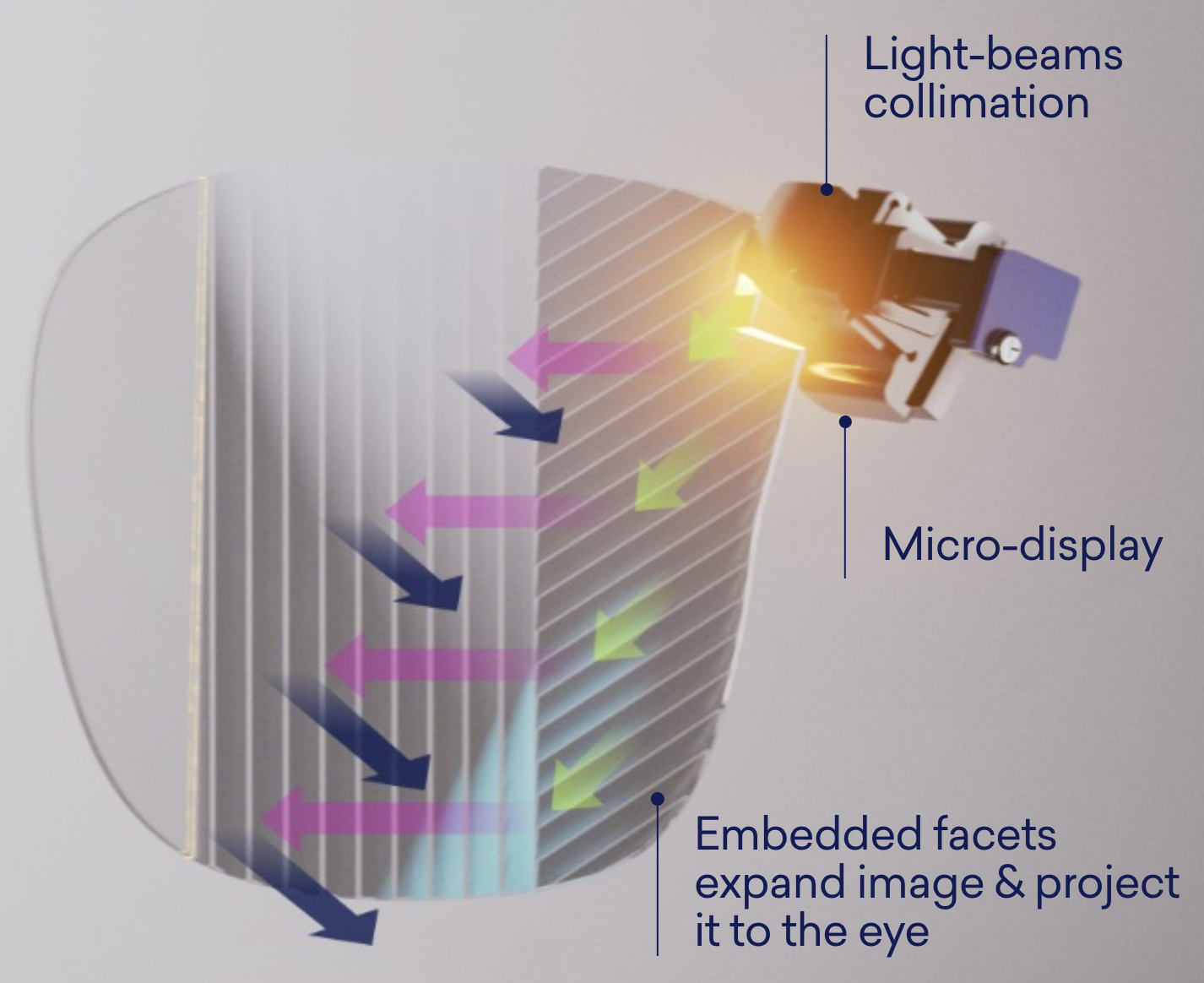

The function of a waveguide is fundamentally quite simple - guide the light from the display into the viewer's eye. Waveguides are usually defined by their underlying mechanism: diffraction or reflection. Whilst diffractive waveguides have been the dominant technology up until now, I believe reflective waveguides are the future of AR glasses due to their superior efficiency and image quality. For more information on waveguides, I highly recommend reading this post by Optofidelity 10.

Above you can see a diagram of a reflective waveguide from Lumus Vision. Lumus Vision is an extremely interesting company that has pioneered reflective waveguides. The light is generated by the micro-display, and expanded and directed into the eye by the waveguide through a series of transflective mirrors. Lumus has been working on reflective waveguides for many years, but they have proved to be extremely difficult to manufacture at scale. However, Lumus has recently renewed its partnership with the glass manufacturing giant Schott to manufacture their waveguides at a new facility in Malaysia. This is a significant step forward for the technology, and could pave the way for the first generation of consumer AR glasses.

Display Technology

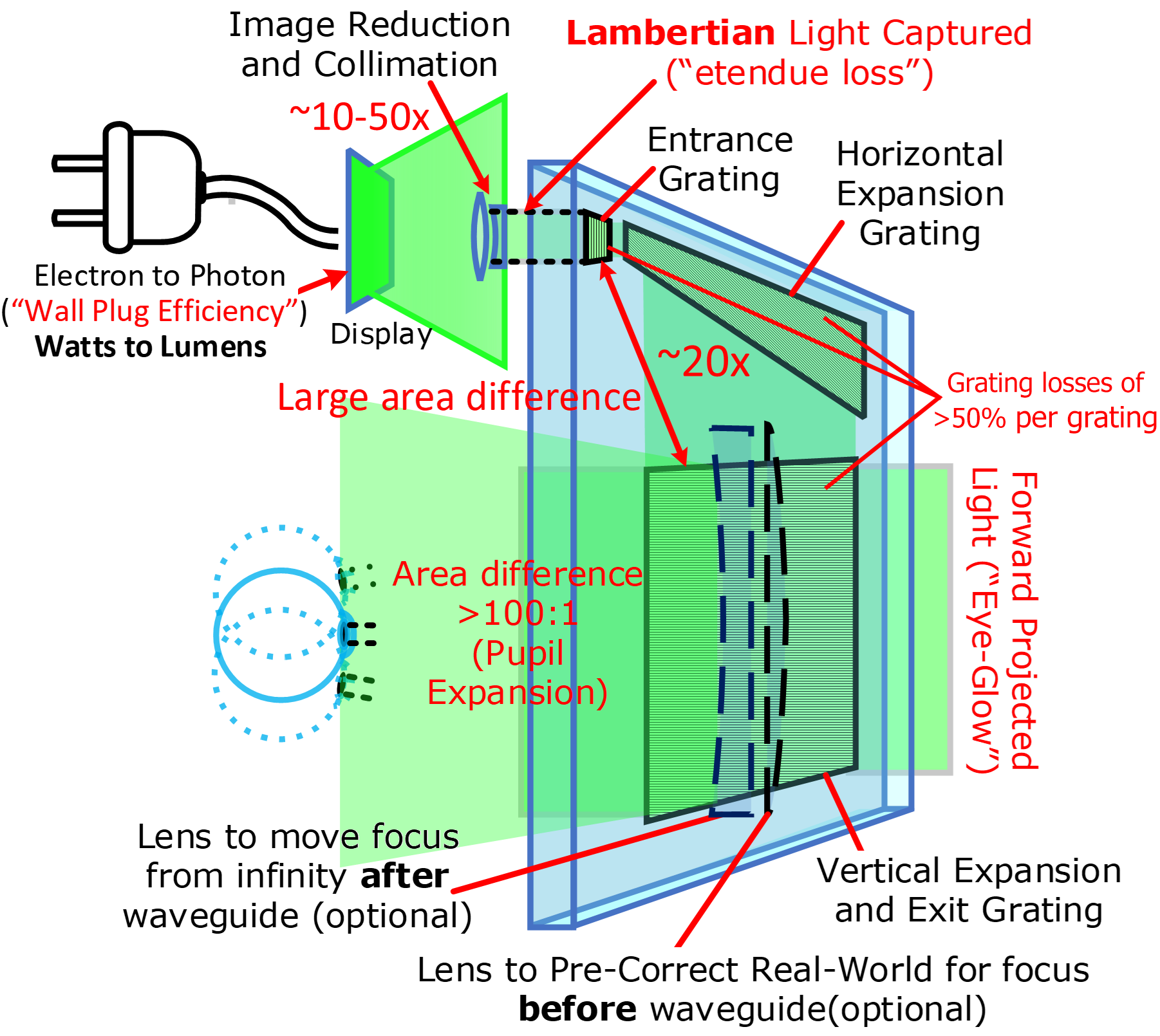

There is a plethora of display technologies available, however only a limited subset are applicable to AR glasses. This is because the losses in a waveguide-based system are astronomical, as demonstrated in the diagram below from Karl Guttag 11.

In order for a suitable amount of nits to reach the eye, the display must be capable of generating a huge amount of light. For this reason, Liquid Crystal on Silicon (LCOS) and MicroLED are the most promising display technologies for AR glasses. Many industry experts see MicroLED as the future of AR displays, with companies like Apple and Meta investing heavily in the technology. Read more about MicroLED in this excellent article by Karl Guttag 11.

Compute

AR glasses are a full stack problem, and the compute requirements are variable and intense. Let's break down the compute workload into its core components:

- Simultaneous Localization and Mapping (SLAM): Understanding the environment

- Neural Networks: Running a multitude of AI models for different tasks

- Rendering: Meshing the real world and digital world together

- Transmission: Funneling data to and from the glasses

- Other: Everything else a standard smartphone does

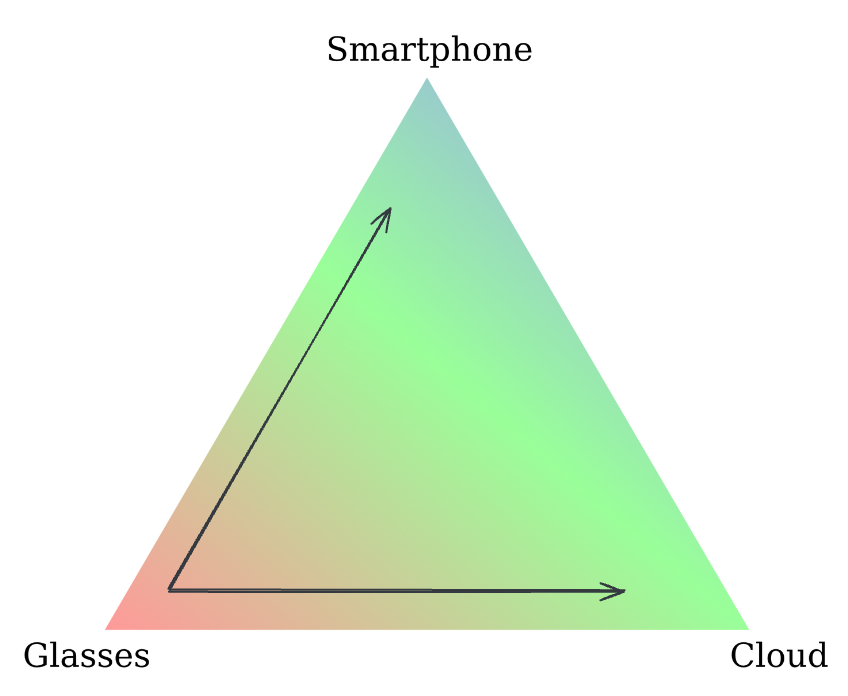

Offloading as much compute as possible to auxiliary devices is key to the success of AR glasses, for both power and performance reasons. Most humans carry around a supercomputer in their pocket, and we can push even more intensive workloads to the cloud, much like Meta is already doing for their current generation of smartglasses.

Each task has to be carefully placed somewhere on this unconjoined triangle of success, trading off latency, power usage and compute requirements. For the neural network use case, using a Router LLM 12 to evaluate query difficulty and selecting the most appropriate device for the workload seems like an interesting avenue.
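As a rough illustration of that placement decision, here is a minimal Python sketch. It is not how any shipping product or published router works: the difficulty score is taken as an input (in practice a small model would have to estimate it), and every threshold and latency figure is a made-up assumption. All names (`Tier`, `TIERS`, `route`) are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    GLASSES = "glasses"
    PHONE = "phone"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Tier:
    target: Target
    max_difficulty: float  # hardest query (0..1) this tier is assumed to handle
    round_trip_ms: float   # assumed extra latency to reach this tier


# Ordered from closest/cheapest to most capable. All numbers are invented.
TIERS = (
    Tier(Target.GLASSES, max_difficulty=0.2, round_trip_ms=0.0),
    Tier(Target.PHONE, max_difficulty=0.6, round_trip_ms=20.0),
    Tier(Target.CLOUD, max_difficulty=1.0, round_trip_ms=150.0),
)


def route(difficulty: float, latency_budget_ms: float) -> Target:
    """Pick the closest tier that can handle the query within the latency budget."""
    for tier in TIERS:
        if difficulty <= tier.max_difficulty and tier.round_trip_ms <= latency_budget_ms:
            return tier.target
    # No tier satisfies both constraints: degrade quality rather than miss the
    # deadline by using the most capable tier that is still reachable in time.
    reachable = [t for t in TIERS if t.round_trip_ms <= latency_budget_ms]
    return reachable[-1].target if reachable else Target.GLASSES


print(route(0.1, 100.0))  # easy query stays on the glasses
print(route(0.9, 500.0))  # hard query with a relaxed deadline goes to the cloud
print(route(0.9, 50.0))   # hard query with a tight deadline falls back to the phone
```

The fallback branch is a design choice: for an overlay that must keep up with the real world, a worse answer on time is assumed to beat a better answer that arrives late.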

Transmission

In order to leverage the two external compute sources, you will need to have a high bandwidth communication channel. In the cloud case, this will obviously be done via WiFi or cellular networks. However, for the glasses-to-phone connection, more care must be taken in selecting the communication protocol. Bluetooth 5.0, whilst ubiquitous and low power, has an extremely limited bandwidth of 2Mbps, which is far from sufficient for AR glasses. Ultra Wideband (UWB) 13 could be the answer, and has been shipping in the iPhone since 2019, albeit purely for real-time location, not data transfer. With a theoretical transfer rate of 1Gbps, it may be the solution that AR and other high bandwidth peripherals are looking for. Meta has been filing numerous patents relating to UWB (e.g. 20240235606), so we may see this technology in their glasses soon.
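To get a feel for the gap between the two links, here is a back-of-the-envelope Python sketch. The 2Mbps and 1Gbps figures are the ones quoted above; the camera resolution, frame rate, bit depth and compression ratio are illustrative assumptions rather than specs of any real glasses, and the helper names are hypothetical.

```python
BLUETOOTH_5_MBPS = 2.0         # figure quoted in the text
UWB_THEORETICAL_MBPS = 1000.0  # 1Gbps theoretical figure quoted in the text


def stream_bitrate_mbps(width: int, height: int, fps: float,
                        bits_per_pixel: float = 24.0,
                        compression_ratio: float = 1.0) -> float:
    """Bitrate of a video stream in Mbps, optionally after compression."""
    raw_bps = width * height * bits_per_pixel * fps
    return raw_bps / compression_ratio / 1e6


def fits(link_mbps: float, required_mbps: float) -> bool:
    """True if the link can carry the stream (ignoring protocol overhead)."""
    return required_mbps <= link_mbps


# Assumed workload: one 720p camera feed at 30 fps with 50:1 video compression.
required = stream_bitrate_mbps(1280, 720, 30, compression_ratio=50.0)
print(f"required ~ {required:.1f} Mbps")
print("Bluetooth 5.0:", fits(BLUETOOTH_5_MBPS, required))
print("UWB (theoretical):", fits(UWB_THEORETICAL_MBPS, required))
```

Under these assumptions a single compressed camera feed already exceeds the Bluetooth figure several times over, before any display data flows back the other way.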

Tomorrow's AR Glasses

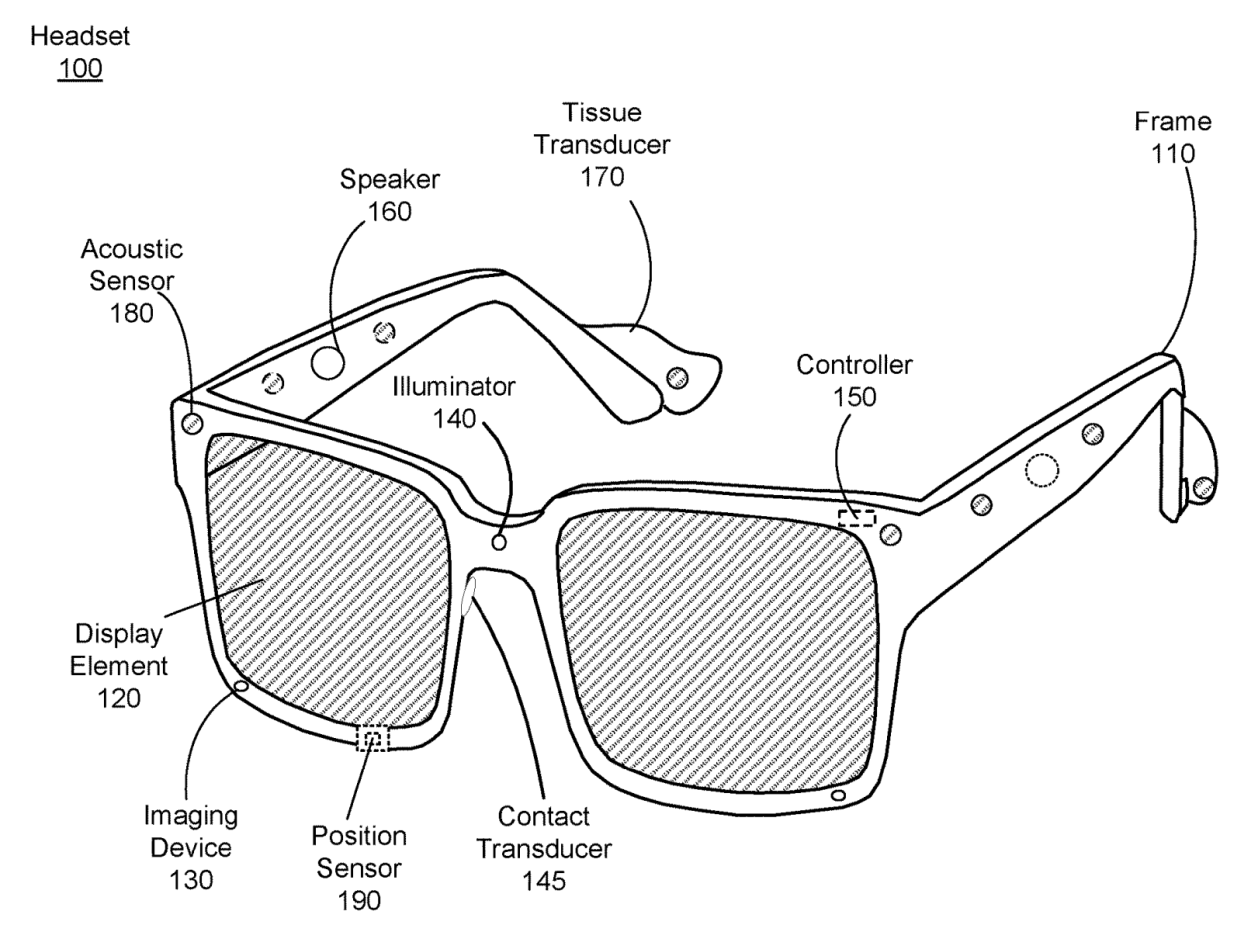

The AR glasses we get in the next ~3 years will not be the ultimate form. In order to rival Manfred's glasses in Accelerando, we will need better specs. The above schematic is taken from one of Meta's most recent patents 14, and demonstrates some interesting innovations such as transducers in both the nose bridge and arms for more immersive spatial audio.

In my opinion, the most salient difference between the first and final generation of AR glasses will be the display technology, particularly the resolution and FoV. As the visual fidelity of the display approaches that of the human eye, the line between the real and the virtual will become blurred, then vanish entirely. This may arrive sooner than you think, with commercial micro displays already hitting 4K×4K resolution @ ~3µm pixel pitch 15.

Assembling a team

If we were to assemble a team to build the ultimate AR glasses, it would look something like this:

- Karl Guttag: AR industry veteran, from whom much of my knowledge is derived

- Shin-Tson Wu: Dr. Wu and his progeny will be the creators of the ultimate AR display

- Oliver Kreylos: Who thoroughly dashed my "lasers are the answer" theory

- Thad Starner: Original Google Glass team

- Bernard Kress: Author of the book on AR optics 16, currently at Google

Conclusion

From all my research, it seems like Meta is well positioned in this market. I would not be surprised to see exponential adoption if they can deliver on their 2028 roadmap. With the recent advancements in AI, the value proposition for AR glasses has gone from exciting to essential. With developments from Lumus and Meta's recent patent filings, it seems like this technology may finally be approaching its "iPhone moment" - provided the near eye optics problem can be solved.

Once the "iPhone moment" is reached, there will be a plethora of regulatory and social challenges to overcome, as highlighted in this excellent SMBC comic. In spite of these challenges, I'm looking forward to the future of AR glasses, and the startups and innovations that will come with them.

TLDR: Long $META.

I'm grateful to Mithun Hunsur, Madeline Ephgrave & Benjamin Perkins for their feedback on this post.

Footnotes

- https://www.roadtovr.com/report-meta-ar-glasses-orion-connect-2024/

- https://www.buildwagon.com/What-happened-to-the-Hololens.html

- https://www.acumenfinancial.co.uk/advice/airpods-revenue-vs-top-tech-companies/

- https://www.rfpmm.org/pdf/how-to-win-friends-and-influence-people.pdf

- https://www.uploadvr.com/meta-hud-glasses-wont-be-ray-bans/

- https://www.radiantvisionsystems.com/blog/ride-wave-augmented-reality-devices-rely-waveguides

- https://www.optofidelity.com/insights/blogs/comparing-and-contrasting-different-waveguide-technologies-diffractive-reflective-and-holographic-waveguides

- https://kguttag.com/2023/03/12/microleds-with-waveguides-ces-ar-vr-mr-2023-pt-7/

- https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/20240118423

- https://kguttag.com/2024/04/20/mixed-reality-at-ces-ar-vr-mr-2024-part-3-display-devices/